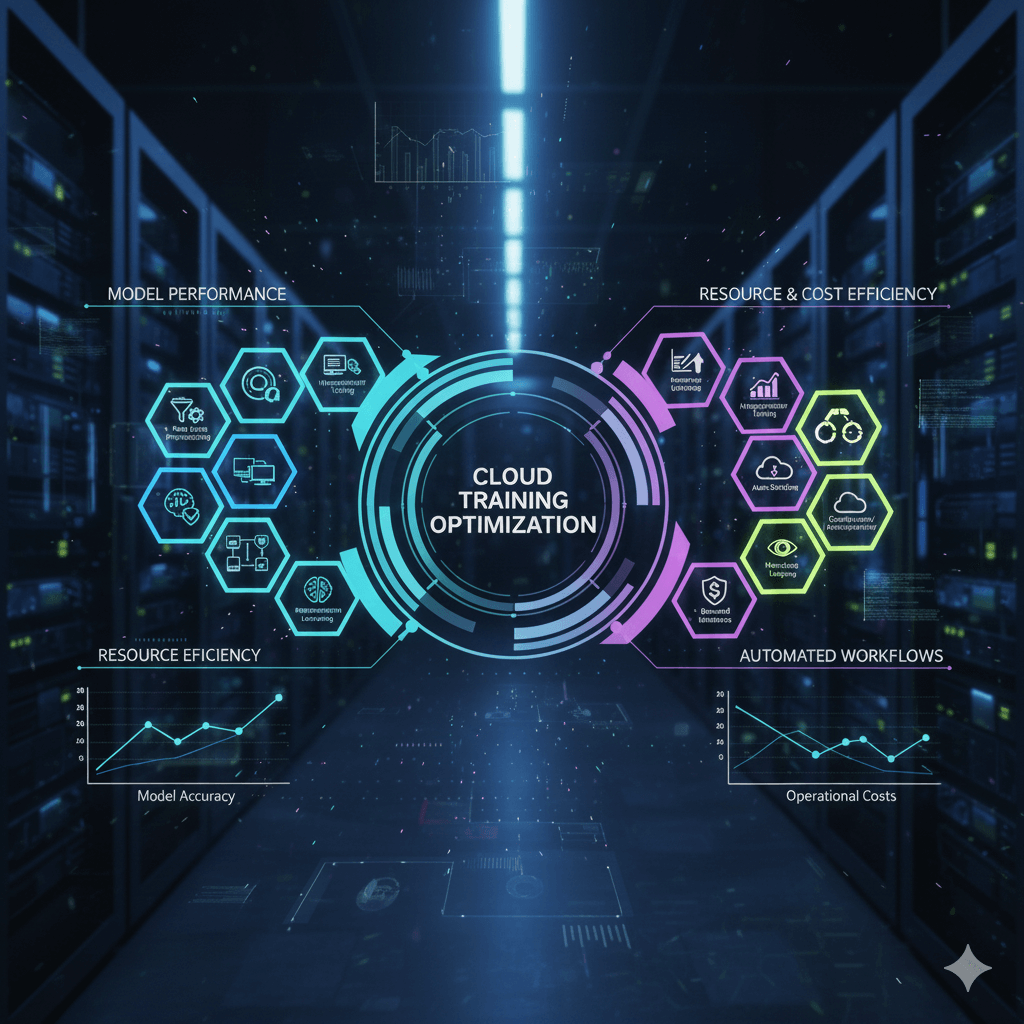

In today’s data-driven landscape, cloud training optimization is essential. This article covers three groups of methods: data preprocessing techniques, hyperparameter tuning strategies, and reinforcement learning principles. Together they improve model performance and efficiency when training on very large datasets in cloud environments.

Understanding Cloud Training Optimization

Cloud training optimization is a critical aspect of deploying machine learning models efficiently and effectively. Training machine learning models typically requires vast computational resources and storage, making cloud computing a natural fit for these workloads. Optimizing cloud training matters because it maximizes resource utilization while reducing costs and training times. As organizations scale their machine learning efforts, they often encounter complex datasets that introduce significant challenges, such as longer training times and deteriorating model performance.

Cloud environments offer on-demand, elastically scalable computing power, which facilitates large-scale machine learning tasks. However, this flexibility comes with its own set of challenges. Balancing the resources allocated to training jobs can be cumbersome, especially when multiple jobs run concurrently, and inefficient resource usage leads to unnecessary operational costs. Data transfer can also become a bottleneck when large datasets are spread across geographical regions. Cloud training optimization strategies exist to manage these intricacies.

Additionally, the architecture of cloud platforms can influence the workflow in machine learning projects. For instance, utilizing serverless solutions can eliminate the need for manual server management, allowing data scientists to focus more on model development. However, understanding the implications of cloud architecture on data processing is vital, as it can affect the speed and efficiency of data ingestion, preprocessing, and training phases.

Key Benefits of Cloud Training Optimization

Data heterogeneity is a further challenge in cloud training environments. Datasets often come from various sources and must be brought to a uniform format, scale, and feature representation, so preprocessing has to be applied rigorously before training begins. Data scientists must handle missing values, outliers, and inconsistent data types, all of which can obscure the patterns a model is supposed to learn.

One effective strategy in cloud training optimization is federated learning, where models are trained locally across multiple devices without centralizing the data. This reduces the need for data transfer and helps address privacy concerns. However, it requires additional coordination, such as aggregating the locally trained updates into a shared model, so that the result benefits from diverse data distributions while training remains efficient.
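The aggregation step in federated learning can be illustrated with a weighted average of client model weights. This is a minimal sketch assuming a FedAvg-style scheme, which the article does not name. The function `federated_average`, the client weights, and the dataset sizes are all hypothetical.

```python
def federated_average(client_weights, client_sizes):
    """Average per-client model weights, weighted by local dataset size.

    Assumption: every client reports a weight vector of the same length.
    """
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


# Hypothetical weights from three clients, each training a two-parameter model.
clients = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
sizes = [100, 100, 200]  # number of local training examples per client

global_weights = federated_average(clients, sizes)
print(global_weights)
```

Only the weight vectors and example counts leave each client here, not the raw data, which is the property the paragraph above relies on.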

Moreover, leveraging automated machine learning (AutoML) can serve as a valuable component in cloud training optimization. AutoML tools streamline various stages of the machine learning workflow, from preprocessing to hyperparameter tuning, thus alleviating manual burdens on data scientists and allowing them to attain superior results more rapidly.

How Cloud Training Optimization Works

At its core, cloud training optimization bridges the expansive capabilities of cloud infrastructure and the demands imposed by complex datasets. Applying these strategies improves model performance and keeps operating costs sustainable over the long term. As cloud computing continues to evolve, organizations that want to get the most from machine learning will need to keep addressing these challenges with the more advanced methods described in the following sections.

Data Preprocessing Techniques for Enhanced Model Performance

Data preprocessing is a critical step in the workflow of cloud-based machine learning, as it lays the foundation for model performance and the eventual success of machine learning initiatives. In the cloud environment, where enormous datasets can be processed simultaneously, effective data preprocessing becomes crucial to eliminate noise, enhance data quality, and ultimately deliver reliable insights.

One of the most essential techniques in data preprocessing is data cleaning. This process involves identifying and correcting inaccuracies or inconsistencies in the data. For instance, missing values can severely impact the training of machine learning models. Techniques such as mean imputation, where missing values are replaced with the mean of the available data, or more sophisticated methods like K-Nearest Neighbors (KNN) imputation can be employed to maintain data integrity. Software tools such as Python’s Pandas library facilitate these cleaning tasks by providing functions to easily manipulate and clean datasets in bulk.
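Mean imputation as described above can be sketched in a few lines. This example uses only the Python standard library so that it is self-contained. The helper `impute_mean` and the sample column are hypothetical, and missing values are assumed to be represented as `None`. In practice the same step is usually done with Pandas or Scikit-learn rather than by hand.

```python
from statistics import mean


def impute_mean(column):
    """Replace missing entries (None) with the mean of the observed values."""
    observed = [v for v in column if v is not None]
    if not observed:
        raise ValueError("column has no observed values to average")
    fill = mean(observed)
    return [fill if v is None else v for v in column]


# Hypothetical numeric column with two missing entries.
ages = [20.0, None, 40.0, 30.0, None]
imputed = impute_mean(ages)
print(imputed)
```

Mean imputation keeps the column mean unchanged but shrinks its variance, which is one reason neighbor-based methods such as KNN imputation are sometimes preferred.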

Normalization and standardization are also pivotal in preparing data for cloud-based training. Normalization typically rescales the data to a defined range, often between 0 and 1, ensuring that each feature contributes equally to the model’s learning process. Conversely, standardization transforms the data to have a mean of zero and a standard deviation of one. This is crucial, especially when dealing with algorithms sensitive to magnitude, such as gradient descent-based approaches. Libraries like Scikit-learn offer built-in functions to streamline these processes, making them accessible regardless of the scale of the dataset.
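The two rescaling schemes differ only in which statistics they use. The following is a minimal standard-library sketch; the function names and the sample feature are illustrative, and the population standard deviation is assumed for standardization.

```python
from statistics import mean, pstdev


def min_max_scale(values):
    """Rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant feature carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values):
    """Shift and scale values to zero mean and unit standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


feature = [2.0, 4.0, 6.0, 8.0]
scaled = min_max_scale(feature)
z_scores = standardize(feature)
print(scaled)
print(z_scores)
```

Whichever scheme is used, the statistics (minimum and maximum, or mean and standard deviation) should be computed on the training split only and then reused for validation and test data, to avoid leaking information.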

Data transformation techniques further enable the extraction of meaningful patterns and relationships from the raw data. For example, feature engineering, which involves creating new features based on existing data, can significantly enhance model predictiveness. This could include polynomial transformations to account for non-linear relationships or applying logarithmic transformations to reduce the skewness in data distributions. Tools like FeatureTools provide automation capabilities for feature engineering, making it easier to handle complicated datasets commonly found in cloud environments.
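As a small illustration of the two transformations mentioned above, the sketch below applies a logarithmic transform to a skewed feature and appends a squared term as a degree-2 polynomial feature. The helper names and data are hypothetical, and `log1p` is an assumed choice that only suits non-negative values.

```python
import math


def log_transform(values):
    """Compress a right-skewed, non-negative feature using log(1 + x)."""
    return [math.log1p(v) for v in values]


def add_squared_feature(rows):
    """Append the square of the first feature to each row (degree-2 term)."""
    return [row + [row[0] ** 2] for row in rows]


# Hypothetical skewed feature spanning several orders of magnitude.
incomes = [0.0, 9.0, 99.0, 999.0]
logged = log_transform(incomes)

expanded = add_squared_feature([[2.0], [3.0]])
print(logged)
print(expanded)
```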

Moreover, encoding categorical variables is essential in dealing with non-numerical data. Methods such as one-hot encoding and label encoding convert categorical data into numerical format, allowing algorithms to process them effectively. Failure to correctly encode these variables can lead to misleading results or reduced model performance. Libraries such as Scikit-learn again shine in this area by providing straightforward functions to convert categorical variables seamlessly.
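The difference between the two encodings is easiest to see side by side. This is a standard-library sketch with hypothetical helper names and category values; categories are assumed to be ordered alphabetically.

```python
def label_encode(values):
    """Map each category to an integer code, ordered alphabetically."""
    categories = sorted(set(values))
    index = {category: code for code, category in enumerate(categories)}
    return [index[v] for v in values], categories


def one_hot_encode(values):
    """Map each category to a 0/1 indicator vector, one slot per category."""
    codes, categories = label_encode(values)
    width = len(categories)
    rows = [[1 if code == slot else 0 for slot in range(width)] for code in codes]
    return rows, categories


# Hypothetical categorical feature.
regions = ["us-east", "eu-west", "us-east", "ap-south"]
codes, categories = label_encode(regions)
one_hot, _ = one_hot_encode(regions)
print(categories)
print(codes)
print(one_hot)
```

Label encoding implies an ordering between categories, which can mislead models that treat the codes as magnitudes. One-hot encoding avoids this at the cost of one column per category.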

The importance of these preprocessing techniques cannot be overstated. By systematically applying data cleaning, normalization, transformation, and encoding, one can eliminate noise and enhance the quality of the data fed into machine learning models. In the context of cloud training optimization, where datasets can be vast and complex, investing time and resources in effective preprocessing is indispensable. Tools tailored for preprocessing tasks can automate and facilitate these processes, ensuring that large-scale datasets are transformed into actionable insights for better decision-making.

Ultimately, the efficiency and effectiveness of machine learning models depend heavily on the data they are trained on. Advanced data preprocessing techniques prepare datasets for cloud training so that the resulting models are not only accurate but also generalize across a variety of real-world scenarios.

Hyperparameter Tuning and Its Impact on Model Accuracy

Hyperparameter tuning plays a pivotal role in the training of machine learning models, acting as a critical factor that influences model performance and generalization. Unlike model parameters, which are learned during training, hyperparameters are set before the training process begins. This distinction is essential because the correct configuration of hyperparameters can lead to substantial improvements in the accuracy and reliability of the resulting model.

Traditional hyperparameter tuning methods include grid search and random search. Grid search exhaustively evaluates every combination in a predefined grid of hyperparameter values. While this guarantees that the best combination within the grid is identified, it is computationally expensive, because the number of combinations grows multiplicatively with the number of hyperparameters and the number of values tried for each. Conversely, random search samples hyperparameter combinations at random from specified ranges or distributions. This can be more efficient than grid search, particularly in high-dimensional spaces: when only a few hyperparameters strongly affect performance, random sampling tries more distinct values of each important hyperparameter for the same number of evaluations, and so can yield comparable results at lower cost.
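The two search strategies can be compared on a toy problem. In this sketch, `validation_loss` is a hypothetical stand-in for a full train-and-validate run, and the hyperparameter names, grids, and sampling ranges are assumptions chosen for illustration.

```python
import itertools
import random


def validation_loss(lr, reg):
    """Hypothetical objective; a real one would train and validate a model."""
    return (lr - 0.1) ** 2 + (reg - 0.01) ** 2


def grid_search(lrs, regs):
    """Evaluate every (lr, reg) pair in the grid and return the best one."""
    return min(itertools.product(lrs, regs), key=lambda p: validation_loss(*p))


def random_search(n_trials, seed=0):
    """Sample (lr, reg) pairs uniformly at random and return the best one."""
    rng = random.Random(seed)
    candidates = [
        (rng.uniform(0.0, 1.0), rng.uniform(0.0, 0.1)) for _ in range(n_trials)
    ]
    return min(candidates, key=lambda p: validation_loss(*p))


# 4 x 3 = 12 grid evaluations versus 12 random evaluations.
best_grid = grid_search([0.001, 0.01, 0.1, 1.0], [0.0, 0.01, 0.1])
best_random = random_search(n_trials=12)
print(best_grid, best_random)
```

With the same budget of 12 evaluations, the grid tries only four distinct learning rates, while random search tries twelve. In a cloud setting each evaluation is independent, so either strategy can be run in parallel across workers.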

In recent years, more sophisticated techniques such as Bayesian optimization have gained traction. This method uses the results of prior evaluations to inform future hyperparameter selections by fitting a probabilistic surrogate model, typically a Gaussian process, to the relationship between hyperparameter settings and model performance. The surrogate estimates how untested settings are likely to perform and is updated after every evaluation, so Bayesian optimization can often identify superior configurations with fewer iterations than grid or random search. At each step it balances exploration of uncertain regions of the search space with exploitation of regions already known to perform well.

The importance of hyperparameter tuning should not be understated. The gap in model performance between well-tuned and poorly tuned hyperparameters can be substantial, potentially several percentage points in accuracy. This is especially critical in competitive environments, where even marginal gains can influence deployment and user satisfaction. Furthermore, tuning that is evaluated on held-out data improves generalization to unseen data rather than only the metrics measured on the training set. This is fundamental for cloud applications, where models need to operate reliably in dynamic, real-world scenarios.

When conducting hyperparameter tuning, it is vital to employ robust validation techniques, such as k-fold cross-validation. These techniques give a more reliable estimate of how the tuned model will perform on unseen data, mitigating the risk of overfitting that arises from an overly optimistic evaluation based solely on training data. Another essential aspect is using automated hyperparameter tuning frameworks, which streamline the process and incorporate techniques such as early stopping and parallel evaluation to make better use of cloud resources.
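The splitting logic behind k-fold cross-validation is sketched below using only the standard library. The generator name `k_fold_indices` is hypothetical, and the sketch assumes the samples are already shuffled, since it assigns folds in index order.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of k folds.

    Each sample lands in exactly one validation fold. Folds are contiguous,
    so the data is assumed to have been shuffled beforehand.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    # Spread the remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    indices = list(range(n_samples))
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size


folds = list(k_fold_indices(n_samples=10, k=3))
for train, validation in folds:
    print(len(train), validation)
```

A hyperparameter setting is then scored by training on each `train` split, evaluating on the matching `validation` split, and averaging the k scores.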

As machine learning continues to evolve, the need for precision in hyperparameter tuning becomes increasingly important. By bridging traditional approaches with more advanced techniques, practitioners can significantly enhance model performance, ensuring that their machine learning solutions can adapt and excel in the demanding and variable landscape of cloud computing. The successful integration of these tuning strategies promotes an overall robust machine learning ecosystem capable of delivering high-stakes applications seamlessly and effectively.

Integrating Reinforcement Learning in Cloud Systems

Incorporating reinforcement learning (RL) into cloud systems can strategically enhance their operational efficiency by leveraging dynamic environments and adaptive decision-making. Reinforcement learning, fundamentally based on the principles of learning through interactions, empowers agents to improve their performance through a reward-based system. This approach is particularly suitable for optimizing cloud training processes, where complexities often arise from variable workloads, resource allocation, and changing user demands.

At its core, reinforcement learning involves an agent that acts within an environment and receives feedback in the form of rewards or penalties. This feedback mechanism drives the learning process, allowing the agent to adjust its actions to maximize cumulative rewards over time. In cloud systems, operators can employ RL agents to manage and allocate computational resources dynamically. For instance, when a cloud environment experiences spikes in demand, an RL agent can learn to allocate additional resources efficiently, ensuring optimal service delivery while minimizing costs.

One of the significant challenges in reinforcement learning is navigating the exploration-exploitation dilemma. Agents continuously face decisions that require them to balance between exploring new actions, which could yield better long-term rewards, and exploiting known actions that maximize immediate rewards. In the context of cloud training optimization, effective management of this dilemma is crucial. For example, when cloud resources are underutilized, an RL agent must decide whether to explore alternative configurations that could lead to performance improvements or exploit existing configurations that are already yielding satisfactory results. Mastering this balance is essential for enhancing the performance of machine learning models deployed in the cloud.
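One simple way to handle the exploration-exploitation trade-off is an epsilon-greedy policy, shown here on a toy problem of choosing between resource configurations. This is a sketch under stated assumptions: the article does not name a specific algorithm, and the function `epsilon_greedy`, the reward values, and the noise level are hypothetical.

```python
import random


def epsilon_greedy(true_rewards, steps=2000, epsilon=0.1, seed=0):
    """Pick among configurations, exploring with probability epsilon.

    true_rewards holds the (hidden) mean reward of each configuration; the
    agent only observes noisy samples and keeps a running average per option.
    """
    rng = random.Random(seed)
    n = len(true_rewards)
    counts = [0] * n
    estimates = [0.0] * n
    for _ in range(steps):
        if rng.random() < epsilon:
            choice = rng.randrange(n)  # explore: try a random configuration
        else:
            choice = max(range(n), key=lambda a: estimates[a])  # exploit
        reward = rng.gauss(true_rewards[choice], 0.1)  # noisy observation
        counts[choice] += 1
        # Incremental update of the running mean for the chosen option.
        estimates[choice] += (reward - estimates[choice]) / counts[choice]
    return estimates, counts


# Hypothetical mean rewards for three resource configurations.
config_rewards = [0.2, 0.5, 0.8]
estimates, counts = epsilon_greedy(config_rewards)
print(counts)
```

A higher `epsilon` finds better configurations sooner but keeps spending evaluations on weak ones. Decaying `epsilon` over time is a common compromise.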

Real-world applications of reinforcement learning in cloud systems illustrate its potential in various scenarios. For example, in cloud resource management, RL can automate the scaling of virtual machines based on real-time metrics to match workload demands, thereby reducing latency and costs. Large cloud providers such as Google and AWS have reportedly begun integrating RL techniques to forecast resource utilization patterns and provision resources adaptively.

Another compelling application is in predictive maintenance and anomaly detection within cloud infrastructure. RL agents can learn from historical performance data and ongoing system metrics to identify potential failures or underperforming resources, optimizing troubleshooting and maintenance cycles. This proactive approach not only enhances system reliability but also reduces downtime, leading to improved overall service quality.

Furthermore, reinforcement learning can enhance the performance of training and inference processes in machine learning. An RL-based model can dynamically adjust hyperparameters during training, optimizing learning rates or regularization parameters in real time based on performance metrics. This dynamic tuning mechanism complements traditional hyperparameter tuning methods, enabling more responsive and adaptive training procedures.

Integrating reinforcement learning into cloud systems marks a significant step towards creating intelligent, self-optimizing environments. By harnessing the ability of agents to learn from interactions and apply that knowledge in real-time, organizations can not only optimize their cloud training processes but also enhance their overall operational efficiency. As machine learning continues to evolve, the role of reinforcement learning in cloud optimization will undoubtedly expand, presenting new opportunities for innovation and improvement in this field.

Conclusions

In conclusion, optimizing cloud training through effective data preprocessing, targeted hyperparameter tuning, and reinforcement learning techniques can lead to substantial improvements in model accuracy and efficiency. By combining these strategies, organizations can strengthen their machine learning capabilities and achieve better outcomes in data analysis and decision-making. For further insights into cloud security practices, refer to NIST Special Publication 800-144, Guidelines on Security and Privacy in Public Cloud Computing.